Key Insights

Deploying Code Llama with OpenLLM enables efficient, production-ready code generation using scalable APIs and GPU acceleration. OpenLLM streamlines serving, monitoring, and versioning, making it well suited to integrating Code Llama into real-world developer tools such as autocompletion or code review systems.

Integrating AI-powered coding assistants like Code Llama into production environments represents a significant leap forward in software development efficiency. However, successfully deploying these tools requires careful planning and execution to realise their full potential while mitigating risks. This guide provides a step-by-step approach to implementing Code Llama in your production workflow using OpenLLM for model serving and NexaStack for infrastructure management.

Unlike experimental or small-scale implementations, production deployments demand robust solutions that address scalability, security, and maintainability. We'll explore the technical implementation and the organisational changes needed to maximise the benefits of AI-assisted development while maintaining code quality and team effectiveness.

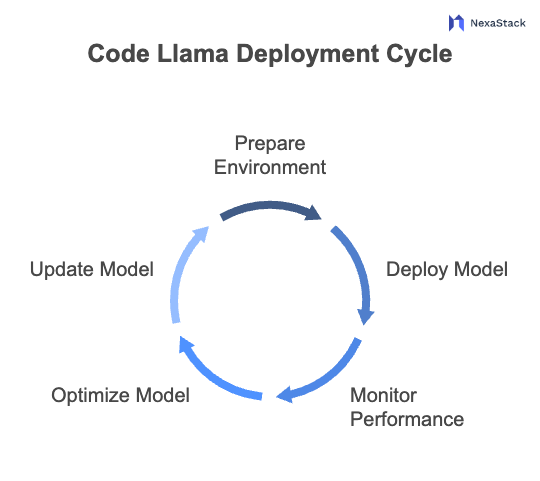

Figure 1: Code Llama Deployment Cycle

Impact Analysis: Understanding the Full Implications

Before integrating Code Llama into your development workflow, it is essential to conduct a comprehensive impact analysis that goes beyond surface-level productivity gains. While AI-generated code can significantly accelerate development, its broader implications, ranging from technical debt accumulation to team dynamics, must be carefully evaluated to ensure sustainable long-term benefits.

Development Velocity vs. Technical Debt: Striking the Right Balance

One of Code Llama's most compelling advantages is its ability to boost development speed, allowing teams to prototype and iterate faster. However, this acceleration can come at a hidden cost. Without proper oversight, AI-generated code may introduce suboptimal patterns, redundant logic, or security vulnerabilities that surface later in the development lifecycle.

To mitigate this risk, teams should:

- Establish pre-implementation benchmarks for both delivery speed and code quality.

- Implement automated code review tools to detect potential technical debt early.

- Schedule regular refactoring sprints to address AI-generated code that may not meet long-term maintainability standards.

By proactively managing these factors, organisations can enjoy the benefits of rapid development without sacrificing code integrity.

Code Consistency and Style Guide Adherence: Ensuring Uniformity

A well-maintained codebase thrives on consistency. Many development teams enforce strict style guides and best practices to ensure readability and maintainability. However, AI-generated code does not always align with these standards out of the box.

To maintain uniformity:

- Enhance linting rules to catch deviations from team conventions.

- Use custom-trained models (if possible) to better align with organisational coding patterns.

- Conduct peer reviews specifically on AI-generated code to ensure it meets internal standards.

Without these safeguards, teams risk a fragmented codebase where human-written and AI-generated code follow different conventions, increasing cognitive load for developers.

Knowledge Retention and Team Dynamics: Preserving Expertise

While Code Llama can serve as a powerful assistant, over-reliance on AI-generated code may inadvertently weaken a team’s collective expertise. Junior developers, in particular, might skip deep dives into fundamental concepts if they consistently rely on AI suggestions.

To counteract this:

- Pair AI-assisted coding with mentorship programs to ensure knowledge transfer.

- Encourage developers to document AI-generated logic to reinforce understanding.

- Implement "AI-free" coding exercises to keep foundational skills sharp.

Security and Compliance: Hidden Risks in AI-Generated Code

Another critical consideration is security. AI models can inadvertently introduce vulnerabilities if trained on flawed or outdated examples. Teams working in regulated industries must ensure AI-generated code complies with industry standards (e.g., HIPAA, GDPR, SOC 2).

Best practices include:

- Running automated security scans on AI-generated code.

- Conducting manual security audits for sensitive components.

- Maintaining clear audit trails to track AI contributions in critical systems.

Implementation Strategy: Building a Sustainable Foundation

Infrastructure Requirements and Planning

Deploying Code Llama in production requires careful infrastructure planning. The model's resource requirements can vary significantly depending on usage patterns. We've found that teams typically underestimate:

- Memory requirements for concurrent users

- GPU utilisation during peak periods

- Network bandwidth for model inference

A phased rollout allows you to monitor these factors and scale resources appropriately. Start with a small group of power users before expanding to the entire team.
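
As a rough illustration of why memory for concurrent users is easy to underestimate, the sketch below estimates weight memory and key/value cache memory separately. It is a back-of-the-envelope calculation, not a sizing tool: the function names are hypothetical, and the layer, head, and dimension values in the usage note are assumptions for a 7B Llama-style model that should be checked against the configuration of the model you actually deploy.

```python
# Approximate bytes needed per parameter for common weight formats.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billions: float, dtype: str = "fp16") -> float:
    """Approximate memory for the model weights alone, in GB (10^9 bytes)."""
    return params_billions * BYTES_PER_PARAM[dtype]


def kv_cache_gb(
    layers: int,
    kv_heads: int,
    head_dim: int,
    context_tokens: int,
    concurrent_requests: int,
    bytes_per_value: int = 2,
) -> float:
    """Approximate key/value cache memory in GB for a batch of requests.

    The leading factor of 2 accounts for storing both keys and values.
    """
    total_bytes = (
        2 * layers * kv_heads * head_dim
        * context_tokens * concurrent_requests * bytes_per_value
    )
    return total_bytes / 1e9


if __name__ == "__main__":
    # Assumed shape for a 7B Llama-style model; verify against your model config.
    weights = weight_memory_gb(7, "fp16")
    cache = kv_cache_gb(layers=32, kv_heads=32, head_dim=128,
                        context_tokens=4096, concurrent_requests=8)
    print(f"weights: {weights:.1f} GB, KV cache: {cache:.1f} GB")
```

Under these assumptions, eight concurrent requests at a 4,096-token context need more cache memory than the fp16 weights themselves, which is why concurrency deserves its own line in the capacity plan. Serving frameworks also add overhead for activations and runtime buffers that this estimate ignores.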

Version Control Integration Strategy

Integrating Code Llama with your version control system requires more thought than simply enabling an API connection. Consider:

- How to attribute AI-generated code in commits

- Whether to implement pre-commit validation hooks

- How to handle large-scale refactoring suggestions

We recommend initially creating a separate branch policy for AI-assisted development, which will allow for careful review before merging into the main branches.
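
One way to make attribution checkable is to require a trailer line in every commit message and validate it in a hook. The sketch below shows only the validation logic; the `AI-Assisted` trailer name and its yes/no values are hypothetical conventions chosen for illustration, not a Git or OpenLLM standard.

```python
import re
from typing import Optional

# Hypothetical team convention: each commit message carries a trailer such as
# "AI-Assisted: yes" or "AI-Assisted: no" on its own line.
TRAILER = re.compile(r"^AI-Assisted:\s*(yes|no)\s*$", re.IGNORECASE | re.MULTILINE)


def ai_attribution(message: str) -> Optional[bool]:
    """Return True or False for a declared value, or None if the trailer is missing."""
    match = TRAILER.search(message)
    if match is None:
        return None
    return match.group(1).lower() == "yes"


def validate_commit_message(message: str) -> bool:
    """A commit passes validation only when it declares attribution either way."""
    return ai_attribution(message) is not None
```

A `commit-msg` hook could read the message file Git passes to it, call `validate_commit_message`, and exit with a non-zero status when the trailer is absent. Requiring an explicit "no" as well as "yes" avoids treating a forgotten trailer as human-written code.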

Performance Benchmarking

Before full deployment, conduct thorough performance benchmarking:

- Measure baseline development metrics (story points completed, PR cycle times)

- Establish control groups using traditional development methods

- Compare results across multiple sprint cycles

This data will help you quantify the impact and identify any unexpected bottlenecks in your workflow.
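
The comparison between a control group and an AI-assisted group can be kept deliberately simple at first. The sketch below compares median PR cycle times; the function name is hypothetical and the numbers in the usage example are placeholders, not measured results.

```python
from statistics import median
from typing import Sequence


def relative_change(control: Sequence[float], treatment: Sequence[float]) -> float:
    """Relative change in the median of `treatment` versus `control`.

    A negative value means the treatment group's median is lower, for example
    shorter PR cycle times.
    """
    control_median = median(control)
    treatment_median = median(treatment)
    return (treatment_median - control_median) / control_median


if __name__ == "__main__":
    # Placeholder PR cycle times in hours; replace with your own sprint data.
    control_hours = [10, 20, 30]
    assisted_hours = [8, 16, 24]
    print(f"{relative_change(control_hours, assisted_hours):+.0%}")
```

Medians are used here because a few very long-running pull requests can dominate a mean. With only a handful of sprints the difference may well be noise, so treat the figure as a prompt for discussion until more cycles are available.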

Integration Framework: Making AI a Seamless Part of Your Workflow

IDE Integration Best Practices

While most teams focus on basic IDE plugin installation, truly effective integration requires deeper customisation:

- Configure context-aware prompting based on the current file type

- Establish project-specific prompt templates

- Implement keyboard shortcuts for everyday AI interactions

These minor optimisations can significantly reduce friction in daily usage.
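
Context-aware prompting can start as a lookup from file extension to a project-specific template. The following is a minimal sketch; the template wording and the `build_prompt` helper are illustrative assumptions rather than part of any IDE plugin or of OpenLLM.

```python
from pathlib import Path

# Hypothetical project-specific templates keyed by file extension.
TEMPLATES = {
    ".py": "You are editing a Python module. Follow PEP 8 and add type hints.\nTask: {task}",
    ".sql": "You are editing a SQL migration. Avoid destructive statements.\nTask: {task}",
    ".ts": "You are editing a TypeScript file. Prefer strict typing.\nTask: {task}",
}
DEFAULT_TEMPLATE = "You are editing a source file.\nTask: {task}"


def build_prompt(file_path: str, task: str) -> str:
    """Pick a template from the file extension and fill in the developer's task."""
    suffix = Path(file_path).suffix.lower()
    template = TEMPLATES.get(suffix, DEFAULT_TEMPLATE)
    return template.format(task=task)
```

For example, `build_prompt("app/main.py", "add retry logic")` selects the Python template, while an unknown extension falls back to the generic one. Keeping the templates in version control lets the team review prompt changes like any other code change.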

CI/CD Pipeline Adjustments

Your continuous integration pipeline will need modifications to handle AI-generated code effectively:

- Add specialised static analysis rules for AI outputs

- Implement differential testing for critical paths

- Consider adding an AI-generated code validation step

These changes help maintain quality while accommodating the unique characteristics of AI-assisted development.
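
Differential testing, mentioned above for critical paths, means running a trusted reference implementation and a candidate (for instance an AI-suggested rewrite) on the same inputs and flagging any disagreement. A minimal sketch follows, with hypothetical function names and a seeded random generator so that failures are reproducible.

```python
import random
from typing import Any, Callable, Optional


def differential_test(
    reference: Callable[[Any], Any],
    candidate: Callable[[Any], Any],
    gen_input: Callable[[random.Random], Any],
    trials: int = 200,
    seed: int = 0,
) -> Optional[Any]:
    """Return the first generated input on which the two functions disagree.

    Returns None when no disagreement is found within `trials` inputs.
    """
    rng = random.Random(seed)  # fixed seed keeps CI runs reproducible
    for _ in range(trials):
        value = gen_input(rng)
        if reference(value) != candidate(value):
            return value
    return None


if __name__ == "__main__":
    # Example: a candidate "sort" that silently drops duplicates.
    def buggy_sort(items):
        return sorted(set(items))

    def random_list(rng):
        return [rng.randint(0, 5) for _ in range(rng.randint(0, 6))]

    print(differential_test(sorted, buggy_sort, random_list))
```

A passing run shows only that no difference was found on the sampled inputs, not that the two implementations are equivalent, so the input generator should be designed to hit the edge cases that matter for the path under test.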

Monitoring and Feedback Loops

Establish comprehensive monitoring to track:

- Model performance metrics (latency, accuracy)

- Developer satisfaction and adoption rates

- Code quality trends over time

Regular retrospectives with the development team can surface valuable insights for continuous improvement.
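
For latency in particular, averages hide the slow requests that frustrate developers, so percentiles are usually more informative. The sketch below computes nearest-rank percentiles from recorded request latencies; the helper names are hypothetical, and in practice a metrics system would typically compute these for you.

```python
import math
from typing import Dict, Sequence


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of
    samples less than or equal to it."""
    if not samples:
        raise ValueError("percentile() needs at least one sample")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def latency_summary(latencies_ms: Sequence[float]) -> Dict[str, float]:
    """Summarise request latencies with the percentiles commonly watched."""
    return {
        "p50": percentile(latencies_ms, 50),
        "p95": percentile(latencies_ms, 95),
        "p99": percentile(latencies_ms, 99),
    }
```

Tracking p95 and p99 alongside the median makes regressions visible when a new model version or a traffic spike affects only a fraction of requests.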

Governance and Security: Managing Risk in AI-Assisted Development

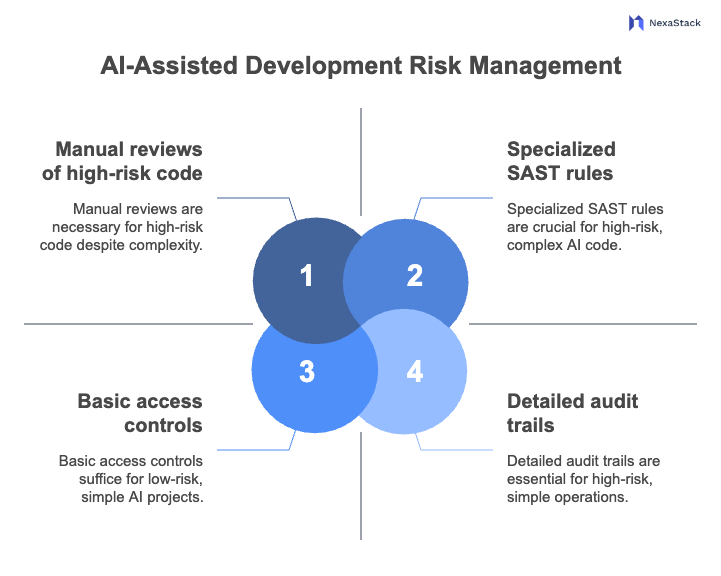

Figure 2: Managing Risk in AI-Assisted Development

Intellectual Property Considerations

AI-generated code raises important IP questions:

- Who owns the copyright to AI-assisted code?

- How does this affect your open-source compliance?

- Are there licensing implications for generated code?

Consult with legal experts to establish clear policies for your organisation.

Security Review Processes

Traditional security reviews may not catch all AI-specific vulnerabilities:

- Implement specialised SAST rules for AI-generated code

- Conduct manual reviews of high-risk generated code

- Monitor for unusual dependency introductions
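
Monitoring for unusual dependency introductions can begin with a simple diff of the dependency manifest before and after a change. The sketch below assumes a pip-style requirements file and a team-maintained approved list; both the helper names and the example package are hypothetical, and the parsing is intentionally simplistic.

```python
import re
from typing import Iterable, List, Set

# Matches the leading package name of a requirement line such as "requests==2.31.0".
NAME = re.compile(r"[A-Za-z0-9][A-Za-z0-9._-]*")


def package_names(requirements_text: str) -> Set[str]:
    """Extract lower-cased package names, ignoring comments, blanks, and options."""
    names = set()
    for line in requirements_text.splitlines():
        line = line.split("#", 1)[0].strip()
        match = NAME.match(line)
        if match:  # option lines such as "-r base.txt" do not match and are skipped
            names.add(match.group(0).lower())
    return names


def new_dependencies(before: str, after: str, approved: Iterable[str] = ()) -> List[str]:
    """Packages present after the change that were neither present before nor approved."""
    approved_names = {name.lower() for name in approved}
    return sorted(package_names(after) - package_names(before) - approved_names)
```

A CI step could call `new_dependencies` with the manifest from the target branch and from the pull request, then require a manual review whenever the result is non-empty. This catches only declared dependencies, so it complements rather than replaces a software composition analysis tool.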

Access Control and Audit Trails

Granular access controls are essential:

- Restrict model access based on project needs

- Maintain detailed logs of AI interactions

- Implement approval workflows for sensitive operations
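
Detailed logs of AI interactions do not have to store prompts verbatim, which may contain proprietary code. One possible approach, sketched below under that assumption, records who made a request and a hash of the content so that entries can later be matched against a known prompt or completion. The field names and the `audit_record` helper are illustrative, not a prescribed schema.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Optional


def _sha256(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def audit_record(
    user: str,
    project: str,
    prompt: str,
    completion: str,
    timestamp: Optional[datetime] = None,
) -> str:
    """Build one JSON log line describing an AI interaction without raw content."""
    moment = timestamp or datetime.now(timezone.utc)
    record = {
        "timestamp": moment.isoformat(),
        "user": user,
        "project": project,
        "prompt_sha256": _sha256(prompt),
        "completion_sha256": _sha256(completion),
        "completion_chars": len(completion),
    }
    return json.dumps(record, sort_keys=True)
```

Each returned string can be appended as one line to a log file or sent to an existing logging pipeline. Whether hashes alone are sufficient depends on your compliance requirements; some teams will need to retain full content in a restricted store instead.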

Performance Metrics: Measuring What Matters

Quantitative Metrics

While traditional metrics like lines of code remain relevant, consider adding:

- AI suggestion acceptance rates

- Time saved on repetitive tasks

- Bug introduction rates compared to human code
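
The acceptance rate is straightforward to compute once suggestion outcomes are logged. The sketch below assumes each suggestion is recorded as one of the strings "accepted", "rejected", or "ignored"; that event vocabulary is a hypothetical convention, and ignored suggestions are excluded from the denominator by choice.

```python
from collections import Counter
from typing import Iterable, Optional


def acceptance_rate(events: Iterable[str]) -> Optional[float]:
    """Share of explicitly decided suggestions that were accepted.

    Returns None when no suggestion was accepted or rejected, so that
    "no data" is not confused with a 0% acceptance rate.
    """
    counts = Counter(events)
    decided = counts["accepted"] + counts["rejected"]
    if decided == 0:
        return None
    return counts["accepted"] / decided
```

For example, two accepted and one rejected suggestion give a rate of two thirds, regardless of how many were ignored. A high acceptance rate is not automatically good: read it alongside the bug introduction rate to check that suggestions are being reviewed rather than accepted by default.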

Qualitative Measures

Don't neglect subjective factors:

- Developer satisfaction surveys

- Code review feedback quality

- Onboarding time for new team members

Conclusion: Implementing AI-Assisted Development Responsibly

Successfully deploying Code Llama in production requires more than technical implementation—it demands a holistic approach considering how AI will integrate with your existing processes, team dynamics, and organisational culture. While the potential benefits are significant—from accelerated development cycles to reduced repetitive work—realising these gains requires careful planning, continuous monitoring, and a commitment to responsible adoption.

One of the most critical lessons from early adopters is that AI-assisted development works best when it complements rather than replaces human expertise. The most successful implementations treat Code Llama as a collaborative partner rather than an automation tool. Developers who engage critically with AI suggestions—questioning, refining, and contextualising the outputs—achieve better results than those who accept them uncritically. This mindset shift is perhaps the most important cultural change organisations must foster.

Looking ahead, we anticipate several key developments in this space. First, we'll see tighter integration between AI coding assistants and other development tools, creating more seamless workflows. Second, expect more sophisticated customisation options, allowing teams to fine-tune models to their specific codebases and domain requirements. Finally, as the technology matures, we'll develop better metrics and methodologies for evaluating the actual impact of AI assistance on software quality and team productivity.
