Key Insights

Secure and Private DeepSeek Deployment ensures your AI workloads run in isolated, encrypted environments with strict access controls. Designed for enterprises, it enables compliance with data privacy regulations while maintaining performance and scalability. By keeping sensitive data on-premises or in a trusted cloud setup, organisations can harness DeepSeek’s capabilities without compromising security.

Performance Benchmarks and Optimisation

Resource Utilisation Metrics

Real-time monitoring of:

- GPU/CPU usage for AI inference, ensuring efficient resource allocation.
- Memory, disk I/O, and caching optimisation for seamless large-scale processing.
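As a rough illustration of the monitoring described above, the following sketch averages a handful of utilisation readings and flags any resource running above a threshold. The `UtilisationSample` type, the `summarise` helper, and the 85% threshold are assumptions made for this example; they are not part of NexaStack or DeepSeek.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class UtilisationSample:
    """One monitoring reading; every value is a percentage from 0 to 100."""
    gpu: float
    cpu: float
    memory: float


def summarise(samples, threshold=85.0):
    """Return the mean of each metric and the metrics whose mean exceeds threshold."""
    if not samples:
        raise ValueError("at least one sample is required")
    averages = {
        "gpu": mean(s.gpu for s in samples),
        "cpu": mean(s.cpu for s in samples),
        "memory": mean(s.memory for s in samples),
    }
    # Hypothetical rule: a resource counts as saturated when its mean is above threshold.
    saturated = sorted(name for name, value in averages.items() if value > threshold)
    return averages, saturated


# Made-up readings, for illustration only.
samples = [
    UtilisationSample(gpu=92.0, cpu=40.0, memory=70.0),
    UtilisationSample(gpu=88.0, cpu=50.0, memory=74.0),
]
averages, saturated = summarise(samples)
print(averages, saturated)
```

In a real deployment the readings would come from whatever metrics agent is already in place; the sketch only shows the aggregation step.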

Latency and Throughput Analysis

- Optimised model inference techniques for ultra-low-latency interactions.
- Scalability testing to maintain high-throughput processing under peak loads.
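Latency and throughput are usually reported as percentiles and requests per second. The sketch below shows one way to derive both from recorded request durations, using the nearest-rank percentile definition. The function names and the sample latencies are illustrative assumptions, not measured DeepSeek results.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile: the smallest value with at least pct% of the data at or below it."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def throughput(request_count, window_seconds):
    """Requests completed per second over the measurement window."""
    if window_seconds <= 0:
        raise ValueError("window_seconds must be positive")
    return request_count / window_seconds


# Hypothetical request latencies in milliseconds, recorded over a 2-second window.
latencies_ms = [12, 15, 11, 14, 120, 13, 16, 12, 15, 14]
p50 = percentile(latencies_ms, 50)
p95 = percentile(latencies_ms, 95)
rps = throughput(len(latencies_ms), window_seconds=2.0)
print(p50, p95, rps)
```

Tail percentiles such as p95 matter because a single slow request (the 120 ms outlier here) barely moves the median but dominates what the slowest users experience.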

Scaling Strategies for High-Demand Scenarios

- Auto-scaling to maintain performance under fluctuating workloads.
- A mix of cloud resources and on-premises infrastructure for hybrid deployments.
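The article does not say how auto-scaling is implemented. One common approach is a proportional rule of the kind used by the Kubernetes Horizontal Pod Autoscaler, which scales the replica count by the ratio of observed load to target load. The sketch below assumes that rule; the function name, the per-replica load units, and the replica limits are hypothetical.

```python
import math


def desired_replicas(current_replicas, observed_load, target_load,
                     min_replicas=1, max_replicas=10):
    """Proportional scaling: ceil(current * observed / target), clamped to the allowed range."""
    if current_replicas < 1:
        raise ValueError("current_replicas must be at least 1")
    if target_load <= 0:
        raise ValueError("target_load must be positive")
    raw = math.ceil(current_replicas * observed_load / target_load)
    return max(min_replicas, min(max_replicas, raw))


# Loads are average requests per second per replica (assumed units).
scale_up = desired_replicas(4, observed_load=90, target_load=60)
scale_down = desired_replicas(4, observed_load=15, target_load=60)
capped = desired_replicas(4, observed_load=300, target_load=60)
print(scale_up, scale_down, capped)
```

Clamping to a minimum and maximum keeps a traffic spike from exhausting the GPU budget and keeps a quiet period from scaling the service to zero.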

These optimisations ensure cost-efficient, high-performance AI deployments that scale with enterprise demands.

Real-World Implementation Case Studies

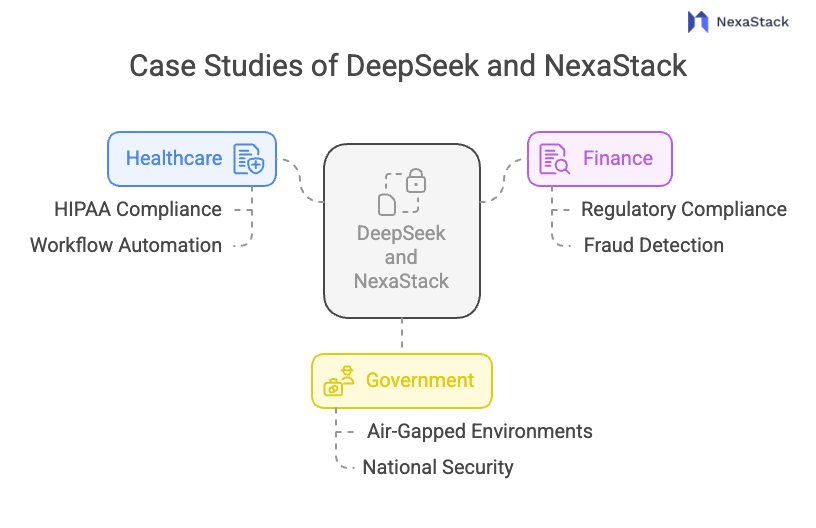

Fig 2: Case Studies of DeepSeek

Healthcare: Secure Patient Data Processing

Hospitals use DeepSeek to automate the analysis of medical documents, which streamlines their workflows while medical records stay encrypted. NexaStack's security framework is HIPAA compliant, which helps protect sensitive healthcare information from data breaches and malicious users.

Finance: Confidential Document Analysis

Banks deploy DeepSeek to automate contract analysis, risk appraisal, and fraud detection. This improves financial operations and operational efficiency. NexaStack provides end-to-end encryption and supports the regulatory requirements that financial institutions face, such as GDPR and PCI DSS.

Government: Classified Information Management

NexaStack allows government institutions to run secure, automated intelligence analysis within air-gapped environments, enabling them to process classified information. This model strengthens national security by denying unauthorised access and blocking cyber threats to strategically sensitive information.

Troubleshooting Common Deployment Challenges

Resolving Performance Bottlenecks

- Determine GPU allocations and inference settings based on the hardware constraints.
- Utilise vertical and horizontal scaling to manage larger workloads.
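A first step in matching GPU allocation to hardware constraints is a back-of-the-envelope check of whether the model weights fit in GPU memory. The sketch below multiplies parameter count by bytes per parameter and leaves some headroom. It is a simplification: it ignores the KV cache, activations, and framework overhead, and the 7-billion-parameter model, the 24 GB GPU, and the 20% headroom are hypothetical values chosen for illustration.

```python
# Bytes needed to store one parameter at each numeric precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params, precision):
    """Memory needed for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def fits_on_gpu(num_params, precision, gpu_memory_gb, headroom=0.2):
    """True if the weights fit after reserving a fraction of memory as headroom."""
    usable_gb = gpu_memory_gb * (1 - headroom)
    return weight_memory_gb(num_params, precision) <= usable_gb


# Hypothetical 7-billion-parameter model on a hypothetical 24 GB GPU.
params = 7_000_000_000
fp16_gb = weight_memory_gb(params, "fp16")
fits_fp16 = fits_on_gpu(params, "fp16", gpu_memory_gb=24)
fits_fp32 = fits_on_gpu(params, "fp32", gpu_memory_gb=24)
print(fp16_gb, fits_fp16, fits_fp32)
```

When the weights do not fit, the usual options are a lower precision, a larger GPU (vertical scaling), or splitting the workload across several GPUs or nodes (horizontal scaling).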

Addressing Security Vulnerabilities

- Conduct regular penetration testing to detect and mitigate potential security risks.
- Enforce multi-factor authentication (MFA) and role-based access controls for AI model security.
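To make the combination of MFA and role-based access control concrete, the sketch below denies every request from a session that has not completed MFA and otherwise checks the requested action against the permissions for the user's role. The role names, actions, and `is_allowed` helper are invented for this example and do not describe NexaStack's actual access model.

```python
from dataclasses import dataclass

# Hypothetical mapping from role to permitted actions.
ROLE_PERMISSIONS = {
    "admin": {"deploy_model", "run_inference", "view_metrics"},
    "analyst": {"run_inference", "view_metrics"},
    "auditor": {"view_metrics"},
}


@dataclass(frozen=True)
class User:
    name: str
    role: str
    mfa_verified: bool


def is_allowed(user, action):
    """Allow an action only if MFA has been completed and the role grants the action."""
    if not user.mfa_verified:
        return False
    # Unknown roles get an empty permission set, so they are denied by default.
    return action in ROLE_PERMISSIONS.get(user.role, set())


alice = User("alice", "admin", mfa_verified=True)
bob = User("bob", "analyst", mfa_verified=True)
carol = User("carol", "admin", mfa_verified=False)

print(is_allowed(alice, "deploy_model"))
print(is_allowed(bob, "deploy_model"))
print(is_allowed(carol, "deploy_model"))
```

The deny-by-default behaviour for unknown roles and unverified sessions is the design choice worth keeping, whatever the real permission model looks like.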

Updating and Maintaining Your Deployment

- Automate security updates, patch management, and model retraining to optimise deployments.
- Maintain a continuous monitoring system for AI performance, resource utilisation, and anomaly detection.
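Anomaly detection in a monitoring pipeline can start as simply as a z-score check, which flags readings that lie far from the mean of recent values. The sketch below assumes that technique; the article does not specify which method is used, and the threshold and sample latencies are illustrative.

```python
from statistics import mean, pstdev


def find_anomalies(values, z_threshold=3.0):
    """Return the indices of values whose z-score exceeds z_threshold."""
    if len(values) < 2:
        return []
    mu = mean(values)
    sigma = pstdev(values)  # population standard deviation
    if sigma == 0:
        return []  # all values identical, so nothing stands out
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > z_threshold]


# Hypothetical per-minute latency readings in milliseconds; the last one is a spike.
readings = [100, 102, 98, 101, 99, 100, 103, 97, 100, 400]
anomalies = find_anomalies(readings, z_threshold=2.5)
print(anomalies)
```

With small windows a single outlier inflates the standard deviation it is measured against, so its z-score is bounded (just under 3 for ten readings). That is why the example uses a threshold of 2.5 rather than the default.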

Upcoming NexaStack Features for DeepSeek

- Enhanced model governance tools for improved compliance, AI lifecycle management, and better control over fine-tuning and model updates.
- Advanced real-time monitoring and analytics to optimise performance, detect anomalies, and enhance proactive system management for enterprise AI deployments.

As privacy-first AI solutions continue to gain traction, NexaStack is positioned to become the gold standard for secure enterprise AI deployment with cutting-edge security measures. Organisations aiming for a private, efficient, and regulation-compliant DeepSeek deployment can start with NexaStack today!

Figure 1: Understanding DeepSeek AI Model
